Audio power levels on E1/T1 timeslots: the digital milliwatt

Posted October 10th 2009

Sometimes, you want to know when the audio on an E1/T1 timeslot has gotten louder than some limit. In a voice mail application, that's useful for catching mistakes such as a subscriber leaving a message but then not hanging up the phone properly; you don't want to record hours and hours of silence. In an IVR application, you might want to keep an eye on the audio level so that a frustrated (shouting!) subscriber can be forwarded to a human operator.

GTH provides a "level detector" to do that sort of thing. You start a level detector on a timeslot, give it a loudness threshold, and it'll notify you whenever the audio on the timeslot goes over that threshold. Here's an example command which notifies you if the power on timeslot 13 of an E1/T1 is louder than -20dBm0:

<new><level_detector threshold='-20'>
  <pcm_source span='2A' timeslot='13'/>
</level_detector></new>

The algorithm is:

- Take a 100ms block of audio (800 samples)

- Square all the samples

- Sum the squares

- If the sum exceeds the loudness threshold, send an XML event
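The steps above can be sketched in Python. This is only an illustration, not the GTH's actual implementation: the function names are made up for this example, and it assumes the sum of squares is divided by the block length and compared against the power of the G.711 digital milliwatt so that the threshold can be given in dBm0.

```python
import math

def alaw_to_linear(code):
    """Decode one A-law octet to a linear sample (full scale is +/-4032)."""
    code ^= 0x55                      # undo the even-bit inversion
    sign = 1 if code & 0x80 else -1
    exponent = (code >> 4) & 0x07
    mantissa = code & 0x0F
    if exponent == 0:
        magnitude = 2 * mantissa + 1
    else:
        magnitude = (2 * mantissa + 33) << (exponent - 1)
    return sign * magnitude

# The G.711 A-law digital milliwatt, used here as the 0 dBm0 reference.
DIGITAL_MILLIWATT = bytes.fromhex("34212134b4a1a1b4")
REFERENCE_POWER = sum(alaw_to_linear(c) ** 2 for c in DIGITAL_MILLIWATT) / 8

def block_level_dbm0(block):
    """Square the samples, sum them, and express the mean power in dBm0."""
    power = sum(alaw_to_linear(c) ** 2 for c in block) / len(block)
    return 10 * math.log10(power / REFERENCE_POWER)

def detect(audio, threshold_dbm0, block_size=800):
    """Return (block_index, level) for every full block above the threshold."""
    events = []
    for start in range(0, len(audio) - block_size + 1, block_size):
        level = block_level_dbm0(audio[start:start + block_size])
        if level > threshold_dbm0:
            events.append((start // block_size, level))
    return events
```

For example, `detect(DIGITAL_MILLIWATT * 100, -20)` reports one event for block 0, while 800 octets of 0x55 (the quietest A-law code) report nothing.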

There are some details to worry about.

The digital milliwatt

The threshold, e.g. -20 in the example above, has to be relative to something. The standard reference power level in telecommunications is the milliwatt. ITU-T G.711, tables 5 and 6, defines the sequences which represent a milliwatt:

A-law: 34 21 21 34 b4 a1 a1 b4

μ-law: 1e 0b 0b 1e 9e 8b 8b 9e

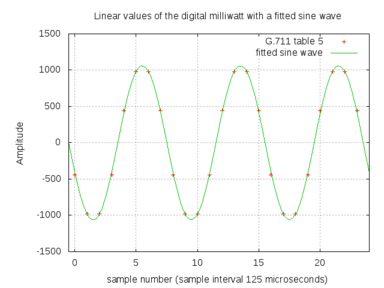

Here's what a few periods of the digital milliwatt look like:

In this post, the unit 'dBm0' means power, in dB relative to the digital milliwatt, as defined by the sequences above. If you have no idea what dB means, Wikipedia has a decent article.
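To make the reference concrete, here's a small sketch that decodes the A-law sequence and converts between dBm0 and mean-square sample values. The helper names are invented for this example, and the linear scale (full scale at 4032) is the one used in the rest of this post.

```python
import math

def alaw_to_linear(code):
    """Decode one A-law octet to a linear sample (full scale is +/-4032)."""
    code ^= 0x55                      # undo the even-bit inversion
    sign = 1 if code & 0x80 else -1
    exponent = (code >> 4) & 0x07
    mantissa = code & 0x0F
    if exponent == 0:
        magnitude = 2 * mantissa + 1
    else:
        magnitude = (2 * mantissa + 33) << (exponent - 1)
    return sign * magnitude

# One period of the A-law digital milliwatt from G.711.
DIGITAL_MILLIWATT = bytes.fromhex("34212134b4a1a1b4")
SAMPLES = [alaw_to_linear(c) for c in DIGITAL_MILLIWATT]

# Mean of the squared samples: this is what 0 dBm0 looks like numerically.
REFERENCE_POWER = sum(s * s for s in SAMPLES) / len(SAMPLES)

def mean_square_to_dbm0(mean_square):
    """Express a mean-square sample value in dB relative to the reference."""
    return 10 * math.log10(mean_square / REFERENCE_POWER)

def dbm0_to_mean_square(level_dbm0):
    """Inverse of mean_square_to_dbm0."""
    return REFERENCE_POWER * 10 ** (level_dbm0 / 10)
```

The decoded samples are ±1120 and ±2624, so a -20dBm0 threshold corresponds to a mean-square value one hundredth of the reference.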

What's the loudest possible sound on a timeslot?

170 (0xaa) is the A-law code with the largest positive amplitude. It corresponds to linear 4032. A signal held at that level is about 6dB louder than the digital milliwatt.

85 (0x55) is the A-law code with the smallest amplitude. It corresponds to linear -1. That's about 66dB softer than the digital milliwatt.

The range -66dBm0...+6dBm0 sets an upper bound on the range of power on a timeslot. Then there are other things which further limit the practical range, so you're unlikely to actually use a +6dBm0 threshold in practice, but it's there if you want it.
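Those two limits can be checked with a few lines of Python. This is a sketch with made-up names; it treats the loudest and quietest cases as a timeslot that carries the same amplitude in every sample.

```python
import math

def alaw_to_linear(code):
    """Decode one A-law octet to a linear sample (full scale is +/-4032)."""
    code ^= 0x55                      # undo the even-bit inversion
    sign = 1 if code & 0x80 else -1
    exponent = (code >> 4) & 0x07
    mantissa = code & 0x0F
    if exponent == 0:
        magnitude = 2 * mantissa + 1
    else:
        magnitude = (2 * mantissa + 33) << (exponent - 1)
    return sign * magnitude

DIGITAL_MILLIWATT = bytes.fromhex("34212134b4a1a1b4")
REFERENCE_POWER = sum(alaw_to_linear(c) ** 2 for c in DIGITAL_MILLIWATT) / 8

def constant_level_dbm0(code):
    """Level of a timeslot that carries the same A-law code in every sample."""
    return 10 * math.log10(alaw_to_linear(code) ** 2 / REFERENCE_POWER)

# Search all 256 codes for the largest sample and the smallest magnitude.
loudest_code = max(range(256), key=alaw_to_linear)
quietest_code = min(range(256), key=lambda c: abs(alaw_to_linear(c)))

print(loudest_code, alaw_to_linear(loudest_code), constant_level_dbm0(loudest_code))
print(quietest_code, alaw_to_linear(quietest_code), constant_level_dbm0(quietest_code))
```

The search lands on codes 170 and 85, and the two levels agree with the +6dBm0 and -66dBm0 figures above.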

Is the G.711 definition the best one?

The sequence given in G.711 is a 1kHz sine wave. The sampling rate on E1/T1 is 8kHz, so the reference sequence can be expressed in just eight values. That's nice, but that also leads to small errors, about 0.13dB, because of quantisation.

ITU-T O.133 discusses that problem in detail and proposes a test signal which specifically is not 1kHz (i.e. not a submultiple of the sampling rate). For most practical purposes, 0.13dB doesn't matter and so the simple and robust thing to do is to use the well-defined and well-known G.711 sequence as a reference.

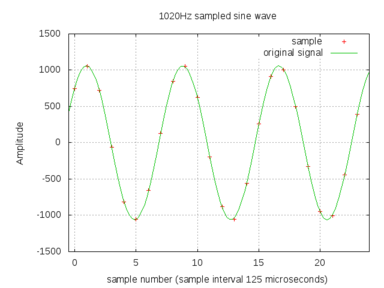

Here's what a few periods of a 1020Hz signal look like. Notice that the samples, i.e. the red crosses, don't appear in the same spot one period later. That way we don't get the same errors over and over again.
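A quick way to see the difference is to count how many samples go by before the sampling points land on exactly the same spots of the waveform again. The function below is just an illustration written for this post.

```python
from math import gcd

def repeat_pattern(tone_hz, sample_rate_hz=8000):
    """Return (samples, tone_periods) before the sample positions repeat."""
    common = gcd(tone_hz, sample_rate_hz)
    return sample_rate_hz // common, tone_hz // common

for tone in (1000, 1020):
    samples, periods = repeat_pattern(tone)
    print(f"{tone} Hz: repeats after {samples} samples ({periods} periods)")
```

At 1000Hz the pattern repeats after 8 samples, i.e. every single period. At 1020Hz it takes 400 samples, spread over 51 periods, so the waveform gets sampled at many more distinct points.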

Testing pitfall: the .wav header

GTH players play raw A-law or μ-law data. If you feed a player a .wav file in 8kHz A-law, or μ-law if your network uses μ-law, there will be a very short bit of noise at the start of the playback because the .wav header gets treated as though it were audio.

When testing level detection, especially at quiet levels, that header noise is enough to trigger a detector. Here's a .wav of a 1000Hz sine wave at about -30dBm0:

00000000  52 49 46 46 42 27 00 00  57 41 56 45 66 6d 74 20  |RIFFB'..WAVEfmt |
00000010  12 00 00 00 06 00 01 00  40 1f 00 00 40 1f 00 00  |........@...@...|
00000020  01 00 08 00 00 00 66 61  63 74 04 00 00 00 10 27  |......fact.....'|
00000030  00 00 64 61 74 61 10 27  00 00 d5 c4 f5 f1 f3 f1  |..data.'........|
00000040  f5 c4 d5 44 75 71 73 71  75 44 d5 c4 f5 f1 f3 f1  |...DuqsquD......|
*
00002740  f5 c4 d5 44 75 71 73 71  75 44                    |...DuqsquD|

The first 58 octets (bytes) are the header. If we turn that header into a periodic signal, it's at about -8dBm0, which is fairly loud. With the default period parameter of 100ms in the level detector, that'll cause a false level of about -11dBm0.
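One way to avoid the problem is to strip the header before playing the file. Here's a minimal sketch that walks the RIFF chunks and returns only the contents of the `data` chunk. The function name is made up for this example, and it does not check that the audio really is 8kHz A-law or μ-law.

```python
import struct

def wav_payload(wav: bytes) -> bytes:
    """Return the raw samples from the 'data' chunk of a RIFF/WAVE file."""
    if wav[0:4] != b"RIFF" or wav[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    pos = 12                                  # first chunk follows 'WAVE'
    while pos + 8 <= len(wav):
        chunk_id = wav[pos:pos + 4]
        (size,) = struct.unpack_from("<I", wav, pos + 4)
        body = pos + 8
        if chunk_id == b"data":
            return wav[body:body + size]
        pos = body + size + (size & 1)        # chunks are padded to even length
    raise ValueError("no data chunk found")
```

Typical use would be something like `open("tone.raw", "wb").write(wav_payload(open("tone.wav", "rb").read()))`, where both filenames are placeholders. For the file dumped above, the payload starts at octet 58.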

The period parameter

The level_detector has an optional parameter, the period. The period sets the size of the audio block the GTH considers when measuring the power. A short period makes the GTH responsive to sudden changes in power on the timeslot, which would be useful in an application such as figuring out which of the people in a conference call are currently talking. A long period averages out the power over a longer time, which is useful in deciding whether a voicemail recording has finished.

The default is 100ms.
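To get a feel for the trade-off, here's a rough sketch of how averaging dilutes a short burst of sound. It assumes the detector reports the mean power over one block, that the burst falls entirely inside that block, and that the rest of the block is completely silent. The function is hypothetical and only does the arithmetic.

```python
import math

def averaged_level_db(burst_ms, period_ms, burst_level_db=0.0):
    """Level of a block holding one burst and otherwise pure silence."""
    # The burst only fills part of the block, so its power is spread out.
    fraction = min(burst_ms, period_ms) / period_ms
    return burst_level_db + 10 * math.log10(fraction)

for period in (20, 100, 1000):
    level = averaged_level_db(burst_ms=20, period_ms=period)
    print(f"period {period} ms: {level:.1f} dB")
```

A 20ms burst is reported at full level with a 20ms period, about 7dB lower with a 100ms period and about 17dB lower with a 1000ms period.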

Sample files

This .zip file contains sample recordings with 1kHz sine waves at 0, -10, -20, -30, -40 and -50 dBm0. The .wav versions are useful for listening to or importing into an audio editing program to calibrate the level meter. The .raw versions are just plain A-law samples, so you can use them with a GTH player.
